I've found myself in academia in romantic pursuit of some sort of shared knowledge about human cognition & experience.


A brief selection of my research projects

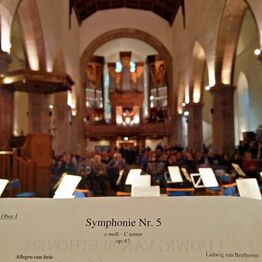

Music Cognition

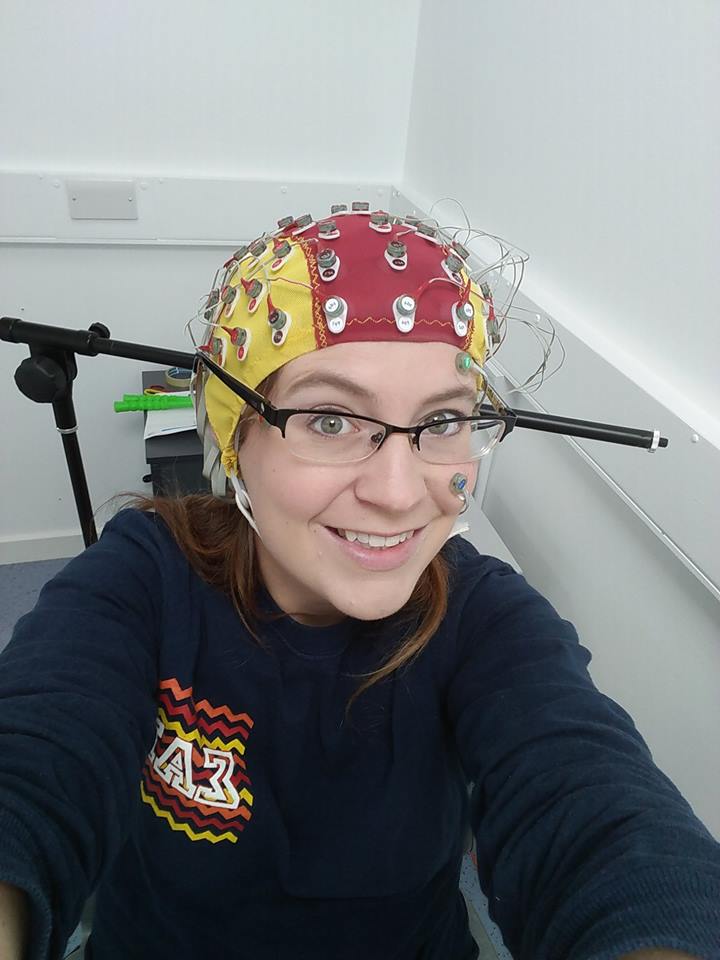

As a member of the Sensorimotor Neuroscience lab at UC Merced, I have researched how the brain processes rhythm (the regular organization of events in time) when we listen to music. The perception of musical rhythm relies on the interaction of motor, parietal, and auditory regions of the brain within a pathway called the dorsal auditory stream. According to the Action Simulation for Auditory Prediction (ASAP) hypothesis (Patel & Iversen, 2014), this pathway carries predictions from the motor cortex, via the parietal cortex, to auditory regions, informing them where in time the next musical beat will land. Using non-invasive transcranial magnetic stimulation (TMS), I have temporarily down-regulated neural activity in this pathway to probe the causal role of the motor system in musical beat perception. Specifically, we have replicated the finding that interfering with the parietal cortex impairs some aspects of beat-based musical timing perception, but these effects may be lateralized to the left hemisphere (Ross, Proksch, Iversen & Balasubramaniam, in review).

In my publication "Motor and Predictive Processes in Auditory Beat and Rhythm Perception," I have argued that the cortical networks proposed by the ASAP hypothesis, in conjunction with the subcortical networks proposed by the Gradual Audiomotor Evolution hypothesis (Merchant & Honing, 2014), provide a converging and more complete description of the role of the motor system in musical timing perception (Proksch, Comstock, Médé, Pabst, & Balasubramaniam, 2020). Additionally, these hypotheses can be integrated under the Active Inference framework of sensory processing, which posits that action and sensation are not two separable processes; rather, the brain-body system actively solicits internal predictive models of incoming sensory stimuli in an ongoing process of prediction error minimization (Adams, Shipp & Friston, 2013).
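To make the idea of prediction error minimization in beat timing a little more concrete, here is a toy sketch in Python. It is loosely modeled on linear phase- and period-correction accounts of sensorimotor synchronization, not on the ASAP, Gradual Audiomotor Evolution, or Active Inference models themselves. The function `track_beats` and its gain parameters `alpha` (phase correction) and `beta` (period correction) are hypothetical. At each beat the model predicts when the next onset will land, measures its prediction error, and uses that error to adjust both its timing and its internal tempo.

```python
from typing import List, Tuple


def track_beats(onsets: List[float], period0: float,
                alpha: float = 0.5, beta: float = 0.1) -> Tuple[List[float], List[float]]:
    """Toy predictive beat tracker (hypothetical illustration, not a published model).

    onsets  : observed beat onset times in seconds
    period0 : initial guess of the beat period in seconds
    alpha   : phase-correction gain (how much of each error shifts the next prediction)
    beta    : period-correction gain (how much of each error updates the tempo estimate)
    Returns the predicted onset times and the prediction errors (observed - predicted).
    """
    predictions: List[float] = []
    errors: List[float] = []
    if len(onsets) < 2:
        return predictions, errors

    period = period0
    expected = onsets[0] + period  # first prediction: one period after the first onset
    for observed in onsets[1:]:
        predictions.append(expected)
        error = observed - expected  # prediction error for this beat
        errors.append(error)
        period += beta * error  # update the internal tempo estimate
        # correct the phase, then project one (updated) period forward
        expected = expected + alpha * error + period
    return predictions, errors
```

With a steady 0.5 s beat and an initial period guess of 0.6 s, the prediction errors eventually decay toward zero as the internal period converges on the true inter-onset interval. A larger `alpha` corrects timing faster, while `beta` controls how quickly the tempo estimate adapts.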

Bridging my work on music cognition and my work on interpersonal coordination, I have collaborated with an interdisciplinary team of international scholars to characterize "Multilevel Rhythms in Multimodal Communication" (Pouw, Proksch, et al., 2021). In this publication, we review how multiple levels of communication, spanning neural, bodily, and social interactions, each with their own multimodal signals, contribute to the complex rhythms of human and non-human animal communication.

Refs:

- Aniruddh D. Patel and John R. Iversen. The evolutionary neuroscience of musical beat perception: the Action Simulation for Auditory Prediction (ASAP) hypothesis. Frontiers in Systems Neuroscience, 8:57, 2014.

- Jessica M. Ross*, Shannon Proksch*, John R. Iversen, and Ramesh Balasubramaniam. Left hemisphere dominance in the dorsal auditory stream for musical beat phase timing perception. In review, 2021.

- Shannon Proksch, Daniel Comstock, Butovens Médé, Alexandria Pabst, and Ramesh Balasubramaniam. Motor and predictive processes in auditory beat and rhythm perception. Frontiers in Human Neuroscience (Cognitive Neuroscience), 2020.

- Hugo Merchant and Henkjan Honing. Are non-human primates capable of rhythmic entrainment? Evidence for the gradual audiomotor evolution hypothesis. Frontiers in Neuroscience, 7:274, 2014.

- Rick A. Adams, Stewart Shipp, and Karl J. Friston. Predictions not commands: active inference in the motor system. Brain Structure and Function, 218(3):611–643, 2013.

- Wim Pouw, Shannon Proksch, Linda Drijvers, Marco Gamba, Judith Holler, Christopher Kello, Rebecca S. Schaefer, and Geraint A. Wiggins. Multilevel rhythms in multimodal communication. Philosophical Transactions of the Royal Society B, 376(1835):20200334, 2021.

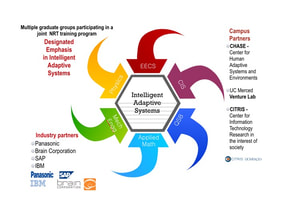

Intelligent Adaptive Systems - Interpersonal Coordination

In temporal rhythmic processing, the interaction of the body, brain, and environment results in an emergent phenomenon of sensorimotor and neural entrainment (Ross and Balasubramaniam, 2014). This emergent phenomenon extends over multiple bodies and brains when the movement dynamics of one individual become causally coupled with the movement dynamics of one or more other individuals, forming an interpersonal synergy through their mutual interaction (Riley, Richardson, Shockley, and Ramenzoni, 2011). Measuring interpersonal synergy can indicate shared social cognition: joint participation in co-regulating multiple patterns of activity between two or more agents engaged in a social interaction (De Jaegher, Di Paolo, and Gallagher, 2010). Ideally, in the controlled setting of a lab, we can measure when an interpersonal synergy develops by correlating signals recorded from each individual within a group social interaction, e.g. electrodermal activity, movement dynamics, speech signals, or even neural activity. However, such individual measurements may not always be available or easy to obtain in very large, naturalistic social interactions. In my role as a National Science Foundation Research Trainee on Intelligent Adaptive Systems, I have applied tools from dynamical systems theory to evaluate the coordination dynamics of large, multi-agent groups of people. I have shown that Recurrence Quantification Analysis (RQA), applied to only a single aggregate measurement, can reveal coordination patterns that differ between independent behavior and interdependent interaction, in which the group forms a single complex system (Proksch, Reeves, Spivey and Balasubramaniam, 2022).
Moreover, conceptualizing interacting groups of humans as engaged in collective synergies helps to shed light on interpersonal behavioral dynamics as "emerging from groups of agents who are embodied and enactive, as well as embedded in an environment, thus making their cognition extended across many interacting elements" co-creating a shared acoustic social world (Proksch, Reeves, Spivey and Balasubramaniam, forthcoming).
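As a rough illustration of what RQA computes from a single time series, the Python/NumPy sketch below time-delay embeds a one-dimensional signal, builds a thresholded recurrence matrix, and reports two standard RQA measures: recurrence rate (RR, the proportion of recurrent points) and determinism (DET, the proportion of recurrent points that fall on diagonal lines of at least `lmin` points). The function names and the embedding dimension, delay, and radius values are hypothetical placeholders; they are not the parameters or analysis pipeline used in Proksch et al. (2022).

```python
import numpy as np


def delay_embed(x: np.ndarray, dim: int, delay: int) -> np.ndarray:
    """Time-delay embedding: row i is (x[i], x[i + delay], ..., x[i + (dim - 1) * delay])."""
    n = len(x) - (dim - 1) * delay
    return np.column_stack([x[i * delay : i * delay + n] for i in range(dim)])


def recurrence_matrix(x, dim: int = 3, delay: int = 1, radius: float = 0.5) -> np.ndarray:
    """Binary matrix with R[i, j] = 1 when embedded states i and j lie within `radius` (Euclidean)."""
    emb = delay_embed(np.asarray(x, dtype=float), dim, delay)
    dists = np.linalg.norm(emb[:, None, :] - emb[None, :, :], axis=-1)
    return (dists <= radius).astype(int)


def rqa_measures(R: np.ndarray, lmin: int = 2) -> tuple:
    """Return (recurrence rate, determinism), excluding the main diagonal (line of identity)."""
    n = R.shape[0]
    n_recurrent = int(R.sum() - np.trace(R))
    rr = n_recurrent / (n * n - n)

    # Count recurrent points that belong to diagonal lines of length >= lmin.
    det_points = 0
    for k in range(1, n):
        for diag in (np.diagonal(R, offset=k), np.diagonal(R, offset=-k)):
            run = 0
            for v in list(diag) + [0]:  # trailing 0 closes a run at the matrix edge
                if v:
                    run += 1
                else:
                    if run >= lmin:
                        det_points += run
                    run = 0
    det = det_points / n_recurrent if n_recurrent else 0.0
    return rr, det
```

For a strictly periodic signal such as `np.tile([0.0, 1.0, 2.0, 3.0], 10)` with a small radius, every recurrence lies on a long diagonal line, so DET is 1.0; noisier, less coordinated signals typically yield lower DET. In practice, embedding parameters are usually chosen with methods such as average mutual information (for the delay) and false nearest neighbors (for the dimension).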

Refs:

- Jessica M. Ross and Ramesh Balasubramaniam. Physical and neural entrainment to rhythm: human sensorimotor coordination across tasks and effector systems. Frontiers in Human Neuroscience, 8:576, 2014.

- Michael A. Riley, Michael Richardson, Kevin Shockley, and Verónica C. Ramenzoni. Interpersonal synergies. Frontiers in Psychology, 2:38, 2011.

- Hanne De Jaegher, Ezequiel Di Paolo, and Shaun Gallagher. Can social interaction constitute social cognition? Trends in Cognitive Sciences, 14(10):441–447, 2010.

- Shannon Proksch, Majerle Reeves, Michael Spivey, and Ramesh Balasubramaniam. Coordination dynamics of multi-agent human interaction in a musical ensemble. Scientific Reports, 12:421, 2022.

- Shannon Proksch, Majerle Reeves, Michael Spivey, and Ramesh Balasubramaniam. Measuring acoustic social worlds: reflections on a study of multiagent human interaction. In S. Besser, F. Lysen, and N. Geode (Eds.), Worlding the Brain. Brill, forthcoming 2022.

Interoceptive Inference and Emotion

What emotional content music communicates or evokes, and how it does so, is a central question for music cognition. An explanation of the physical processes underlying music-related emotion must appeal to more than extramusical associations and violations of expected musical features in the external auditory signal. A thorough account of music-related emotion must also take into account the physiological processes involved in the affective experience of the listener or performer.


- The Predictive Processing (PP) framework explains the privileged role of expectation in action and perception, and active interoceptive inference in particular emphasizes the physiological homeostatic drive underpinning emotional experience. However, PP lacks a detailed description of the neural and physiological mechanisms that enable these homeostatic processes, and it needs to go beyond explanations of mere positive or negative affective valence.

- The Quartet Theory of Emotion, as proposed by Koelsch et al. (2015), provides a detailed neural mechanism that takes into account how the brain and associated biological systems actually effect homeostatic maintenance and generate various aspects of emotional experience. It therefore has the potential to address limitations within PP accounts of emotion and to further expand the predictive processing framework as a whole.

Refs:

- Stefan Koelsch, Arthur M. Jacobs, Winfried Menninghaus, Katja Liebal, Gisela Klann-Delius, Christian von Scheve, and Gunter Gebauer. The quartet theory of human emotions: an integrative and neurofunctional model. Physics of Life Reviews, 13:1–27, 2015.

- Shannon Proksch. Interoceptive inference and emotion in music: integrating the neurofunctional ‘Quartet Theory of Emotion’ with predictive processing in music-related emotional experience. Journal of Cognition and Neuroethics, 5(1):101–125, 2017.

Sometimes I talk about my work on social media
